Compute requirements for deep learning (DL) and high-performance computing (HPC) are growing exponentially. Many of the most important and valuable workloads in this domain require more flexible and performant compute than traditional processors can provide. Moreover, there is a growing population of converged AI and HPC workloads that are not well addressed by legacy machines.

In this presentation, Dr. Andy Hock, VP of Product at Cerebras Systems, describes the Cerebras CS-2 system and its application to large-scale HPC and AI workloads. This solution not only supports the largest models of today but also extends seamlessly to giant models with more than 100 trillion parameters, which are impractical to implement today.

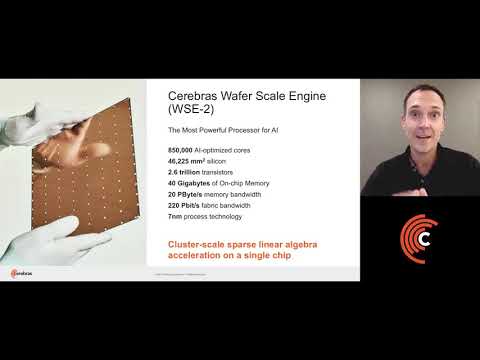

Our wafer-scale accelerator technology features unprecedented compute density and memory and communication bandwidth, and is uniquely built for sparse linear algebra. Our co-designed software execution and hardware cluster technology allows users to quickly and easily support enormous workloads that would require thousands of petaflops and take days or weeks to execute on warehouse-sized clusters of legacy, general-purpose processors. We will also review our new software development kit, which enables lower-level kernel programming for new or custom HPC and AI application development.

Cerebras Systems builds the ultimate accelerator for AI and HPC workloads. With this kind of horsepower, the possibilities are endless.

Learn more about the Cerebras SDK: https://youtu.be/ZXJzS_LHxcQ

Cerebras @ SC21: https://cerebras.net/blog/sc21

Learn more about Cerebras: https://cerebras.net

#AI #HPC #artificialintelligence #NLP

SC21: Powering Extreme-Scale AI and HPC Compute with Wafer-Scale Accelerators

November 16, 2021

